Facial Recognition Technology [FRT] has become increasingly prevalent in recent years. It is deployed in places ranging from airports to shopping centres and is used for many purposes, including law enforcement.

While the technology may prevent some crimes and assist in solving others, it poses serious privacy risks.

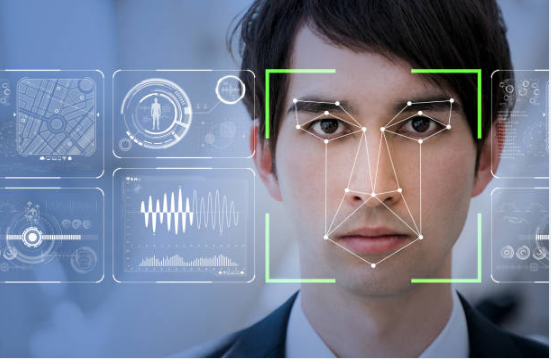

FRT identifies people in security photos and videos by comparing their faces against a database of photos. The key identifying feature is the geometry of a face, particularly the distance between a person's eyes and the distance from their forehead to their chin. These measurements create a ‘facial signature’, a mathematical representation of the face that is stored in a database of known faces for the purposes of identification.
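To make the idea of a ‘facial signature’ more concrete, the following is a deliberately simplified Python sketch. It assumes that the positions of the eyes, forehead and chin have already been located in an image (the landmark names, coordinates and tolerance value are hypothetical), reduces the two distances described above to a single ratio, and matches that ratio against a small database of known faces. Real FRT systems use far richer measurements, typically learned by machine learning models, so this illustrates the principle rather than how any particular product works.

```python
import math
from typing import Optional

# Hypothetical landmark format: name -> (x, y) pixel coordinates.
Landmarks = dict[str, tuple[float, float]]


def facial_signature(landmarks: Landmarks) -> float:
    """Toy signature: eye spacing divided by forehead-to-chin length.

    Using a ratio means the value does not change with how large the
    face appears in the image.
    """
    eye_gap = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    face_length = math.dist(landmarks["forehead"], landmarks["chin"])
    return eye_gap / face_length


def identify(probe: float, database: dict[str, float],
             tolerance: float = 0.02) -> Optional[str]:
    """Return the closest stored identity within `tolerance`, else None.

    `tolerance` is a hypothetical matching threshold for illustration.
    """
    best_name, best_diff = None, tolerance
    for name, stored in database.items():
        diff = abs(probe - stored)
        if diff <= best_diff:
            best_name, best_diff = name, diff
    return best_name


if __name__ == "__main__":
    # Hypothetical database of stored signatures for known faces.
    known_faces = {"person_a": 0.50, "person_b": 0.58}
    captured = {"left_eye": (30, 40), "right_eye": (70, 40),
                "forehead": (50, 10), "chin": (50, 90)}
    signature = facial_signature(captured)  # 40 / 80 = 0.5
    print(identify(signature, known_faces))  # person_a
```

Because a match only requires the signature to fall within a tolerance, two different people with similar facial proportions can be matched to the same stored record, which is one simple way to see how false positives can arise.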

Facial recognition equipment collects and uses personal data, including biometric data, which under our Data Protection (Jersey) Law 2018 [DPJL] constitutes special category data. The law treats special category data as more sensitive and requires that it receive greater protection, because its processing poses significant risks to a person’s fundamental rights and freedoms, such as the risk of unlawful discrimination.

Information Commissioner Dr Jay Fedorak highlights that ‘FRT processing involves a high risk to the rights and freedoms of natural persons. Studies have demonstrated that it is prone to error, including false positives. And these errors are greater for individuals of different ethnic backgrounds.’

FRT is subject to all the requirements of the DPJL for high-risk processing of special category data, which include:

Given these risks and legal requirements, Data Controllers should consult the Jersey Office of the Information Commissioner [JOIC] before embarking on high-volume processing of special category data.

FRT, like all forms of video surveillance, should be a last resort: used only to address serious problems where less intrusive alternatives have failed, and where the benefits of processing clearly outweigh the risk of harm to data subjects.

Dr Fedorak echoes the UK Information Commissioner, Ms Denham, in calling on the Government to ‘introduce at the earliest opportunity a statutory binding code of practice to provide further safeguards that address the specific issues arising from the use of biometric technology such as FRT. This would further inform competent authorities within the law enforcement sector about how and when they can use FRT in public spaces to comply with the data protection law.’

Read: UK Information Commissioner’s Opinion: The use of live facial recognition technology by law enforcement in public places (31 October 2019).